Anybody else feeling that the amount of new things to learn has gotten out of control?

Yes, I do. Maybe I'm just getting old?

About 25 years ago, I was a C++ expert. I had a copy of the ANSI standard printed out in a binder, and I was our group's official language lawyer, because I knew where in the binder to find each language issue. I also knew Java, to the point that I put corrections into Java books. By coincidence, I ran into the author of one of the O'Reilly books about POSIX (their kid and my kid happened to play in the same high school band), and it turned out our level of knowledge about POSIX standards was comparable. So I was pretty much an expert.

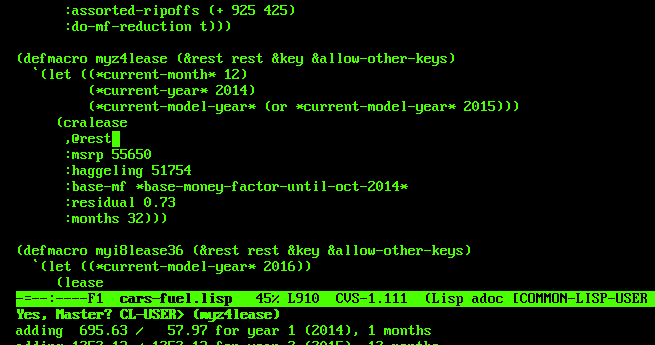

Fast forward 25 years. I got hired by a big FAANG company that programs in C++17 (and later in C++20). I had spent the intervening time working in an old-fashioned company programming in C++ of the ~1998 level. And I discovered that I didn't even know how to read and understand modern C++ code, much less write it. OK, I spent a few weeks' worth of evenings on training classes, and at least I was able to read it. I even checked out a dedicated textbook about "C++ move semantics" from the library. Yes, such a book exists! It taught me that C++ has become so ridiculously complicated, I do not WISH TO program in it, even for the few months that I had the required skills. Was the problem that I got old and stupid? Perhaps, but the complexity of C++ has increased to a ridiculous degree.

Then I got thrown into a briar patch of modern Java code. I thought I knew Java: I programmed in it from about 1994 to 2000, intensely. Turns out the stuff I knew was correct, but completely unidiomatic. For some reason, the common Java style took the "Gang of Four patterns book" and inhaled the whole thing (there's a joke about Bill Clinton in there). While technically the code I had to read and fix was still Java, it was really all about patterns. So now I was faced with forgetting everything I knew about style, and re-learning it.

In my opinion, part of the problem is that my knowledge (and to a lesser extent yours, you are much younger) is simply dated and has not been refreshed. That's because the day has only so many hours, and I can't spend a few hours every day on C++ or Java programming just to stay "up to date"; instead I need to do my day job and get paid. But as you point out, part of the problem is that languages have become much more complex: both the language definition (C++ is the perfect example here), and the style (Java is my example).

What is the cause of this? That's a tough one. Part of it is that C was a really crappy language, which barely worked for systems (kernel) development, but at least it was better than the only alternative at the time: assembly. C++ was a pus-covered band-aid put on top of C to make programming large software projects more rational (that's a dirty joke about Grady Booch, the person who has done more damage to software engineering than any other, perhaps excluding RMS). But it didn't fix the basic problems of C, which are memory management, string management, and memory management (there is an old joke about Alzheimer's in that sentence). As a matter of fact, by allowing us to write more "sensible code" on a weak foundation, it made software development harder: We were now using C++ for million-line projects, but the basic language was designed for 10K-line projects (such as the Unix kernel, or awk or Postgres). And to deal with those hyper-large systems (remember when Mentor or Cadence tried to compile 10M-line C++ artifacts!), we had to add all the complexity to C++ to make it barely work. And we've been kicking that dead whale down the beach, and every 2-3 years it gets even bigger and deader.

Then came Java, and the idea of fixing it all. Started out nice. Turned into a huge political/corporate/economic nightmare (remember Scott McNealy suggesting that killing Bill Gates would be a good idea, since the inheritance tax on his riches would pay for the federal deficit). And again, language and libraries and style grew out of control.

Now add the problem that the whole concept of "OS" has failed. The idea behind an OS was to virtualize a real computer, and allow multiple users and multiple processes to share it. Several people can log in, and each of them can run several programs at once. I remember using computers where that was NOT possible, so having an OS (like VMS, Unix, or MVS/TSO) was a real step forward. But it turns out we utterly failed at it. Instead of having an OS, we had many. We needed VMs, so we could run multiple different OSes. What happened to the idea of portable software, or "compile once, run anywhere"? And then we discovered that even if everyone wants to run the same OS on the hardware, the version control problem is unsolvable. So we gave up on portable software and version interoperability, and instead went to Docker / Flatpak / Kubernetes / Jails / and so on: Every program comes with a complete copy of the OS and all libraries. You are probably old enough to remember Rob Pike's paper "Systems Software Research is Irrelevant". Not only was he right, we are dancing on the grave of systems in production. In the old days, we took a 100-line C program and compiled it, and ran it. If we were good (and I was!), we wrote it such that it would compile on any POSIX-compliant system. Today we wrap that 100-line C program into a multi-gigabyte MPM, which requires Rabbit and Rapid to build (I'm giving you clues about which environment I worked in), and then we schedule that MPM to be run by Borg (which was designed by 10 PhDs with degrees in econometrics, and figuring out the scheduling algorithm requires one to have that PhD). I thought this was the stupidest thing ever ... until my colleagues taught me that a 10-liner in Python (an interpreted language that doesn't require compilation, nor libraries!) also needs to be turned into a multi-gigabyte MPM and scheduled through Borg to actually run. No, I'm not allowed to do "./quick_test.py" from the command line.

So explain to me again: Why do I need to learn everything about Docker and Kubernetes, to run 100 lines of C or 10 lines of Python?

-----------------------

Sorry about this post. It's an example of "old man yells at clouds".