Hello,

I have a small FreeBSD server (an old Intel Xeon e1200 with 8GB DDR3) at home that serves as a Samba server.

It is configured with 4 disks in RAID10 using ZFS. Everything works fine; I have been using this setup for over a year.

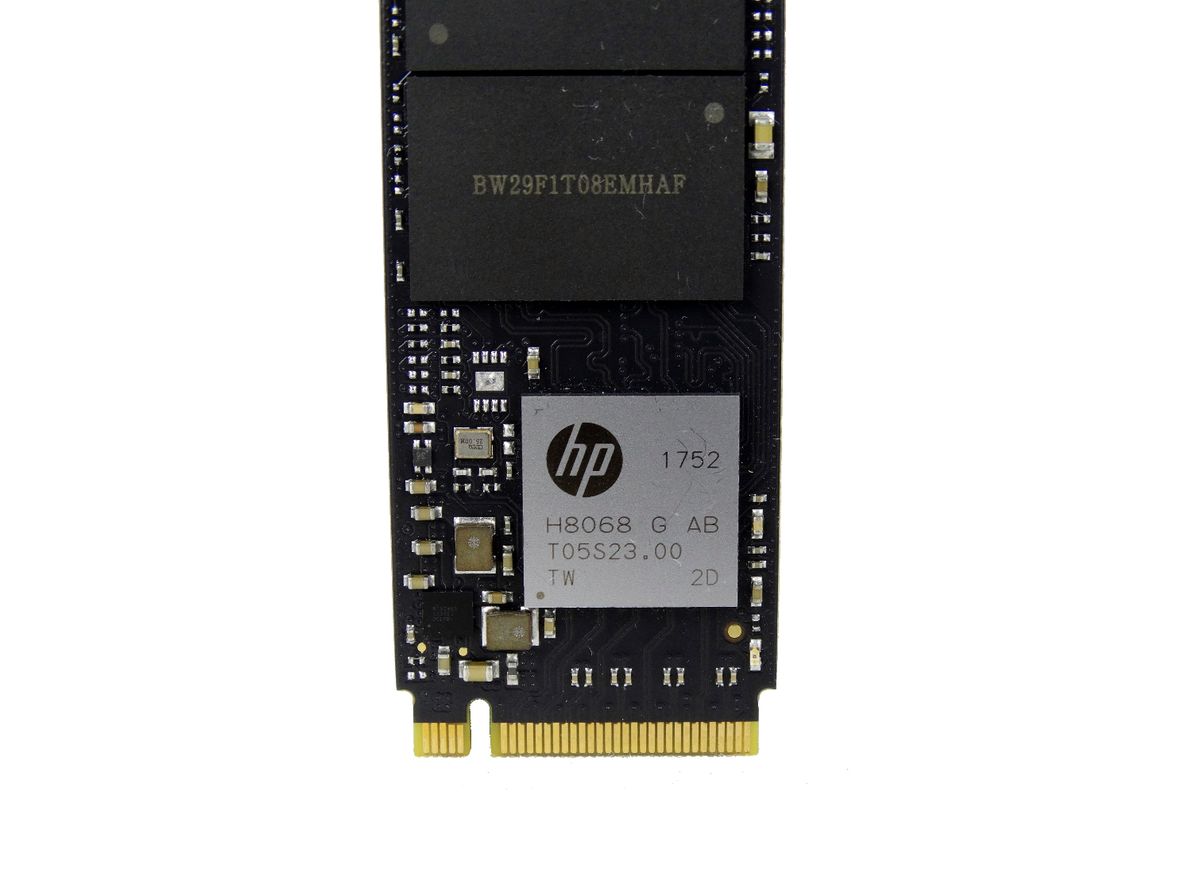

Recently I bought a PCI-E NVMe M.2 adapter + HP SSD EX900 M.2 128GB to use as a cache disk on ZFS.

OS: FreeBSD 13.1

The problem is that my card / SSD is not detected.

Code:

nvmecontrol devlist

No NVMe controllers found.

camcontrol devlist returns:

Code:

camcontrol devlist

<ST2000DM008-2FR102 0001> at scbus0 target 0 lun 0 (pass0,ada0)

<ST2000DM008-2FR102 0001> at scbus1 target 0 lun 0 (pass1,ada1)

<ST2000DM008-2FR102 0001> at scbus2 target 0 lun 0 (pass2,ada2)

<ST2000DM008-2FR102 0001> at scbus3 target 0 lun 0 (pass3,ada3)

<TOSHIBA DT01ACA200 MX4OABB0> at scbus4 target 0 lun 0 (pass4,ada4)

<AHCI SGPIO Enclosure 2.00 0001> at scbus5 target 0 lun 0 (pass5,ses0)

Does the BIOS need to be UEFI for this to work?

Any ideas?
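One more check that might help narrow this down. This is only a sketch, and the helper name `filter_nvme_class` is made up for illustration. `pciconf -l` lists every PCI device the kernel sees, and an NVMe controller should report a class code starting with 0x0108 (mass storage, NVM subclass). If nothing matches, the adapter is presumably not visible on the PCI bus at all. If a matching line starts with `none`, the device is seen but no driver has attached to it.

```sh
#!/bin/sh
# Hypothetical helper: keep only PCI entries whose class code begins with
# 0x0108 (mass storage / non-volatile memory), reading `pciconf -l` style
# lines on stdin. Prints nothing when no such device is listed.
filter_nvme_class() {
    grep -i 'class=0x0108' || true
}

# Run the check only where pciconf exists (i.e. on the FreeBSD box itself).
if command -v pciconf >/dev/null 2>&1; then
    pciconf -l | filter_nvme_class
fi
```

An empty result here would point at the slot, the adapter, or the BIOS rather than at FreeBSD itself.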

Thanks!